Meta launches AI model Llama 4, claims “world’s highest performance,” ready to compete with DeepSeek

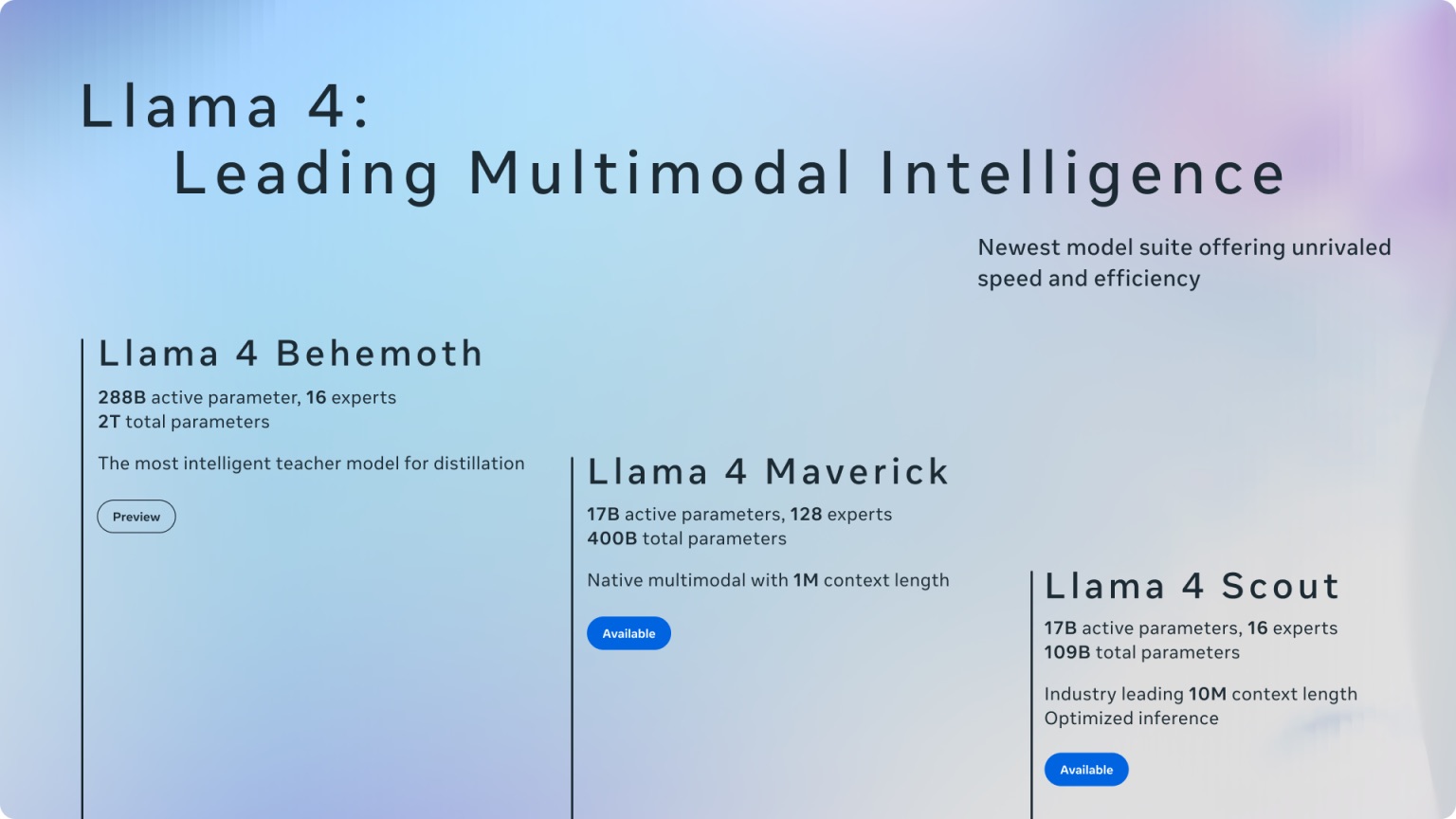

Llama 4 includes three models: Llama 4 Scout, Llama 4 Maverick, and Llama 4 Behemoth.

Meta has just announced Llama 4, its latest collection of AI models, now integrated into the Meta AI assistant on the web as well as in WhatsApp, Messenger, and Instagram apps.

Two of the new models – Llama 4 Scout and Llama 4 Maverick – are now available for direct download from Meta or via the Hugging Face platform. Scout is a smaller model that can run on a single Nvidia H100 GPU, while Maverick is larger and is being compared to GPT-4o and Gemini 2.0 Flash. Meta also stated that it is continuing to train the Llama 4 Behemoth model, which CEO Mark Zuckerberg claims is “the highest-performing foundation model in the world.”

According to Meta, Llama 4 Scout features a context window of up to 10 million tokens (the context window is roughly the working memory of an AI model) and outperforms models like Google’s Gemma 3, Gemini 2.0 Flash-Lite, and the open-source Mistral 3.1 on many common benchmarks, while still requiring only a single Nvidia H100 GPU to run. Meta made similar claims about Maverick, stating that its performance is on par with GPT-4o and Gemini 2.0 Flash, and comparable to DeepSeek-V3 on coding and reasoning benchmarks, while using less than half as many active parameters as DeepSeek-V3.

Meanwhile, Llama 4 Behemoth is the largest model in the collection, with 288 billion active parameters and a total of 2 trillion parameters. Though not yet released, Meta claims Behemoth can surpass competitors like GPT-4.5 and Claude Sonnet 3.7 in many STEM-related (Science, Technology, Engineering, and Math) benchmarks.
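To put the gap between active and total parameters in perspective, the short Python calculation below uses only the two Behemoth figures quoted above. The helper name `active_fraction` is made up for illustration; it is not part of any Meta tooling.

```python
def active_fraction(active_params: float, total_params: float) -> float:
    """Share of a model's parameters that are used for any single input."""
    return active_params / total_params


# Figures as reported by Meta for Llama 4 Behemoth.
BEHEMOTH_ACTIVE = 288e9   # 288 billion active parameters
BEHEMOTH_TOTAL = 2e12     # 2 trillion total parameters

behemoth_share = active_fraction(BEHEMOTH_ACTIVE, BEHEMOTH_TOTAL)

if __name__ == "__main__":
    print(f"Active share: {behemoth_share:.1%}")
```

By this arithmetic, roughly one parameter in seven is in use at any given moment, based on the numbers Meta has published.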

For Llama 4, Meta has adopted a “mixture of experts” (MoE) architecture, an approach that conserves computing resources by activating only a subset of the model’s parameters (the relevant “experts”) for each input instead of the whole network. The company plans to share more about its AI roadmap at the upcoming LlamaCon event, scheduled for April 29.
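The general idea behind a mixture of experts can be shown with a toy Python sketch. This is not Meta's implementation: the router weights, the four stand-in "experts" and the `moe_forward` function are all hypothetical, chosen only to show a router picking the top-scoring experts while the rest stay idle.

```python
import math


def softmax(scores):
    """Turn raw router scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(scale):
    """Hypothetical expert: multiplies every element of the input by `scale`."""
    return lambda vec: [scale * x for x in vec]


def moe_forward(token, router_weights, experts, top_k=1):
    """Route one token vector to its top_k experts and mix their outputs."""
    # One router score per expert: dot product of the token with that expert's row.
    scores = [sum(w * x for w, x in zip(row, token)) for row in router_weights]
    probs = softmax(scores)
    # Keep only the top_k experts; the others are never evaluated.
    chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    output = [0.0] * len(token)
    for i in chosen:
        weight = probs[i] / norm  # renormalise over the chosen experts
        expert_out = experts[i](token)
        output = [o + weight * e for o, e in zip(output, expert_out)]
    return output, chosen


# Illustrative values only: 4 experts operating on 3-dimensional tokens.
ROUTER = [[1, 0, 0], [0, 1, 0], [0, 0, 1], [-1, -1, -1]]
EXPERTS = [make_expert(s) for s in (1.0, 2.0, 3.0, 4.0)]

if __name__ == "__main__":
    out, chosen = moe_forward([0.1, 2.0, 0.3], ROUTER, EXPERTS, top_k=1)
    print("experts used:", chosen, "output:", out)
```

With `top_k=1`, only one of the four experts runs for this token, which is the source of the savings: the model's total size can grow without the per-input compute growing with it.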

As with previous versions, Meta calls Llama 4 open-source, though it has faced criticism regarding its licensing terms. For example, commercial organizations with over 700 million monthly active users must request permission from Meta before using the model – a condition which, according to the Open Source Initiative, means Llama “no longer qualifies as truly open-source” by traditional definitions.